
Efficient Caustic Rendering with Lightweight Photon Mapping

Pascal Grittmann

Saarland University

Arsene Pérard-Gayot

Saarland University

Philipp Slusallek

DFKI, Saarland University

Jaroslav Křivánek

Charles University, Prague

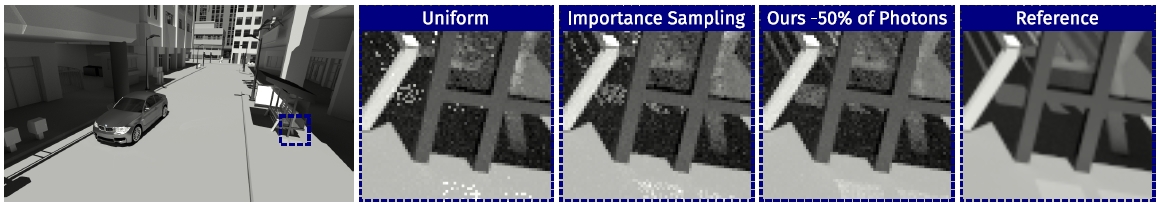

Different photon emission strategies in the CAR scene. We achieve a significantly better photon density inside caustics with fewer photons. The emission directions and the number of light paths (and thus photons) are optimized automatically by our method.

Abstract

Robust and efficient rendering of complex lighting effects, such as caustics, remains a challenging task. While algorithms like vertex connection and merging can render such effects robustly, their significant overhead over a simple path tracer is not always justified and, as we show in this paper, also not necessary. In current rendering solutions, caustics often require the user to enable a specialized algorithm, usually a photon mapper, and hand-tune its parameters. But even with carefully chosen parameters, photon mapping may still trace many photons that the path tracer could sample well enough, or, even worse, that are not visible at all.

Our goal is robust, yet lightweight, caustics rendering. To that end, we propose a technique to identify and focus computation on the photon paths that offer significant variance reduction over samples from a path tracer. We apply this technique in a rendering solution combining path tracing and photon mapping. The photon emission is automatically guided towards regions where the photons are useful, i.e., provide substantial variance reduction for the currently rendered image. Our method achieves better photon densities with fewer light paths (and thus photons) than emission guiding approaches based on visual importance. In addition, we automatically determine an appropriate number of photons for a given

Reference

Pascal Grittmann, Arsene Pérard-Gayot, Philipp Slusallek, and Jaroslav Křivánek.

Efficient Caustic Rendering with Lightweight Photon Mapping.

Computer Graphics Forum (Proceedings of the 29th Eurographics Symposium on Rendering), 37(4): 133-142, 2018

DOI | BibTeX

Links and Downloads

paper fulltext | pdf (46 MB)

Acknowledgments

This work was supported by the German Research Foundation (DFG): SFB 1233, Robust Vision: Inference Principles and Neural Mechanisms, TP 2. It received further funding from the European Union's Horizon 2020 research and innovation program, under the Marie Skłodowska-Curie grant agreement No 642841 (DISTRO), and was supported by the Czech Science Foundation grant 16-18964S and the Charles University grant SVV-2017-260452.